Are you certain your website's design is driving conversions effectively?

A/B testing is a straightforward method for comparing two versions of a webpage or app to determine which one performs better, helping businesses improve conversion rates. For example, in one reported case an improved homepage layout led to a 6% conversion increase, adding 375 sign-ups per month, and a test of text link colors also lifted conversion rates.

This guide delves into A/B testing strategies, providing insights into setting goals, selecting variables, analyzing results, and avoiding common pitfalls. By the end, you'll be equipped to plan and execute tests that can significantly boost your site's performance.

TL;DR

- Start every A/B test with a clearly defined goal and hypothesis. This removes guesswork and guards against poor engagement and lost revenue.

- Change only one variable at a time. Whether it’s CTA text, layout, or form length, isolate the change for clean results.

- Always validate test results with proper segmentation and statistical significance. Early patterns can be misleading.

- Mistakes like ending tests too soon or skipping mobile-specific tests can kill your campaign's ROI. Avoid shortcuts.

What is A/B Testing?

A/B testing is a structured way to compare two versions of a digital asset, like a webpage or email. You randomly divide your audience into two groups to see which version drives more conversions or engagement. This helps reduce assumptions and gives clear data on what elements your customers respond to best. You might test a CTA, image, or layout and use the result to guide future campaigns. The core goal is to make small changes that compound into significant improvements over time.

Now, let's look into why A/B testing should be a priority for your team and how it can impact your campaigns.

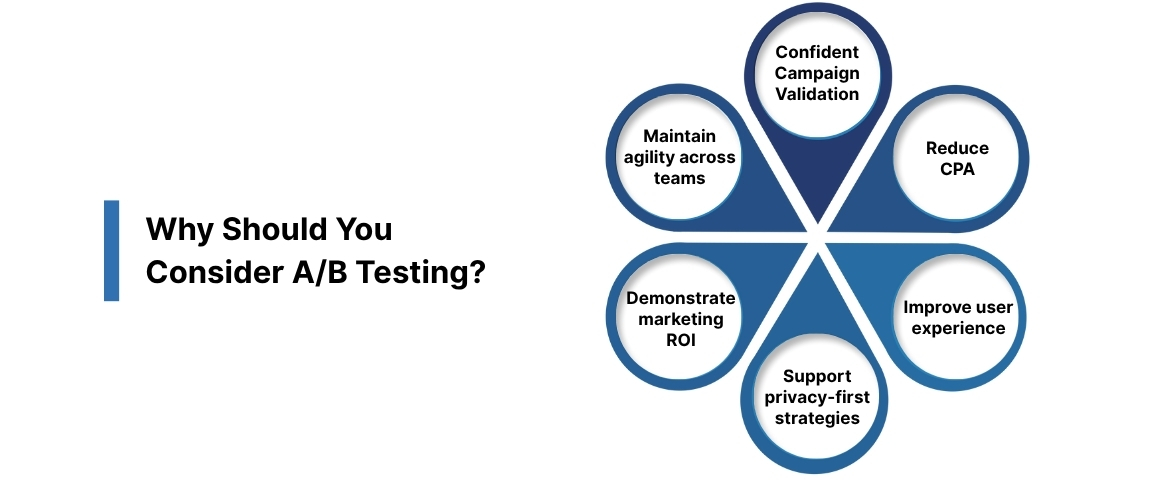

Why Should You Consider A/B Testing?

Many teams launch campaigns without knowing what version resonates best, resulting in poor engagement and lost revenue. A/B testing strategies offer a measurable way to optimize content, improve UX, and increase campaign efficiency over time. With A/B testing, you can prove whether a new layout, headline, or offer improves performance across specific customer segments.

Here are the key reasons you should prioritize testing across campaigns, platforms, and audience segments:

- Validate campaign changes with confidence: Testing helps you identify winning variants before scaling rather than relying on subjective opinions or internal preferences.

- Reduce cost per acquisition (CPA): By testing creatives, CTAs, or audience segments, you avoid wasting money on underperforming variants or assumptions.

- Improve user experience: A/B testing reveals which layouts, flows, or formats resonate most with users, improving time on site and conversions.

- Support privacy-first strategies: If you're avoiding third-party cookies, testing helps refine what first-party data strategies work best for retention and engagement.

- Demonstrate marketing ROI: Testing enables your team to connect content decisions directly to measurable performance improvements, which is ideal for justifying larger budgets or experiments.

- Maintain agility across teams: Digital marketing, product, and analytics teams stay aligned by using test data as a shared source of truth.

If you're managing multiple channels or stakeholders, A/B testing becomes essential for making scalable, low-risk changes. Whether you're running TikTok ads, optimizing product pages, or launching new funnels, testing gives you clarity and control.

Next, we'll take a closer look at the types of elements you should focus on when designing your A/B tests to get the most actionable results.

What Can You A/B Test?

A/B testing strategies work best when focused on elements that directly influence user behavior, engagement, or purchase intent. If your goal is to increase conversions or improve user journeys, the right test can uncover actionable insights quickly.

Below are key areas you should prioritize when planning meaningful tests across websites, product flows, and marketing campaigns.

- Headlines and Page Copy: Your headline is often the first impression; testing clarity, tone, or value proposition can directly improve bounce and engagement rates. Small wording changes in copy or product descriptions can shift how users perceive value and take action.

- Call-to-Actions (CTAs): CTAs influence every click and conversion, making them one of the most impactful elements to test regularly. You can test button text, color, size, and placement to identify what actually drives the most interactions or sign-ups.

- Product Images and Visual Elements: Visuals shape user trust; testing between lifestyle shots, product-only images, or even video can impact your conversion rate. eCommerce brands often use A/B testing strategies here to understand which visuals support buyer confidence best.

- Page Layout and Content Hierarchy: Testing your layout allows you to discover how users prefer to consume information across landing pages, pricing, or feature sections. Shifting sections, adjusting white space, or reordering content blocks can reduce friction and improve engagement.

- Forms and Checkout Flows: If your form is too long or unclear, users may drop off. Test field count, progress indicators, or step layout. Optimizing checkout flow with A/B testing helps reduce cart abandonment and streamline user movement through conversion funnels.

- Pricing Structures and Offers: Testing price formats, discount placements, or subscription tiers can reveal what pricing strategies your audience responds to best. This is especially useful for SaaS products or DTC brands with tiered offerings or promotions.

- Navigation and Menus: Site navigation heavily impacts usability. Test dropdown behavior, menu labels, and category ordering to boost page depth and retention. For content-heavy platforms, navigation clarity plays a key role in time-on-site and bounce rate metrics.

- Email Campaigns and Automation: Test subject lines, preview text, send times, and CTA wording to boost open and click-through rates over time. Pair email testing with landing page variants for a complete view of which messaging performs across user touchpoints.

- Mobile vs Desktop Experiences: Your mobile audience may behave very differently, so test layouts, interaction models, and media formats tailored to mobile usage. Optimizing for device-specific behavior is critical for conversion and speed-sensitive industries.

Now that we've identified the areas to focus on, let's discuss the different kinds of A/B tests you can run, so you're prepared to experiment the right way.

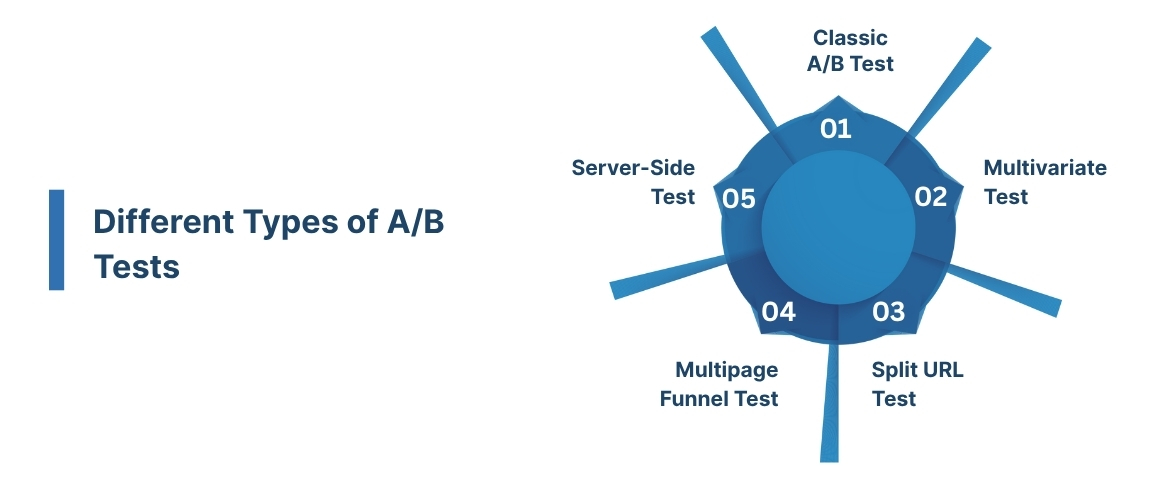

Different Types of A/B Tests

Selecting the right type of A/B test depends on your traffic, data infrastructure, and the complexity of your variation. The right strategy ensures reliable outcomes and avoids wasting resources on flawed or inconclusive experiments.

These A/B testing strategies are employed by growth teams, marketers, and analysts seeking data-driven performance improvements.

1. Classic A/B Test

This method compares two versions of a single variable, like a button label or product headline, across similar audiences.

It’s ideal for testing one change at a time so you can clearly attribute performance differences to that variation. If you’re optimizing product pages or marketing emails, this strategy offers quick wins with low implementation effort.

2. Multivariate Test

Multivariate testing allows you to test combinations of elements, such as CTA, layout, and imagery, all in a single test.

It’s helpful for high-traffic websites where multiple components influence performance and need parallel optimization. These tests require more users but reveal how elements interact together, not just in isolation.
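To see why multivariate tests need more users, it helps to count the variants. The sketch below is illustrative only: the element options and the per-variant visitor figure are assumptions, not values from any real test.

```python
from itertools import product

# Hypothetical options for each element under test (illustrative only).
elements = {
    "cta": ["Buy Now", "Add to Cart"],
    "layout": ["single-column", "two-column"],
    "image": ["product-only", "lifestyle", "video"],
}

# Every combination of options becomes its own variant.
variants = list(product(*elements.values()))

# Assumed visitors needed per variant; in practice this comes from a
# sample-size calculation rather than a fixed constant.
visitors_per_variant = 5_000
total_visitors = len(variants) * visitors_per_variant

print(f"{len(variants)} variants, about {total_visitors:,} visitors needed")
```

Adding one more option to any element multiplies the variant count, which is why this approach suits high-traffic pages.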

3. Split URL Test

This test sends users to entirely different page URLs to evaluate full-page redesigns or alternate layout structures.

It’s best used when comparing two completely different versions of a landing page, checkout, or homepage flow. Make sure both variants are tracked evenly and follow SEO best practices to avoid duplicate content issues.

4. Multipage Funnel Test

In a multipage test, you experiment with changes that span across several steps in the user journey or conversion funnel.

It’s often used in e-commerce to test different checkout flows, page sequences, or pricing page navigation experiences. This helps optimize how users move from discovery to purchase rather than just a single interaction.

5. Server-Side Test

Server-side testing runs entirely in the backend, keeping page loads fast and preserving data privacy and site performance.

This approach is ideal for teams working with sensitive customer data or strict compliance frameworks like GDPR or CCPA. It also supports advanced logic testing, such as algorithm adjustments or dynamic content personalization.

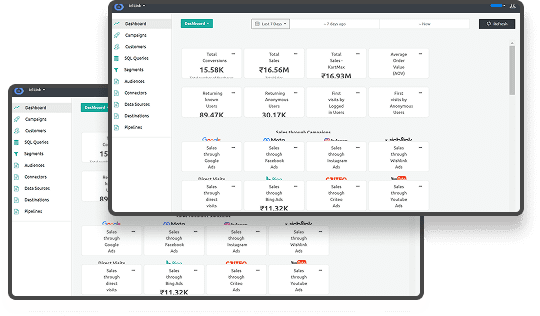

With Ingest IQ’s server-side tracking and Event IQ’s real-time analytics, Ingest Labs lets you run A/B tests without slowing down your site or violating privacy laws. Whether you're testing multipage funnels or split URLs, its Tag Manager ensures precise variation control, versioning, and data validation, all from a centralized dashboard.

Once you’re clear on the type of test, it’s important to understand the statistical side of things to ensure your results are meaningful. Let’s break that down.

A/B Testing Statistical Approach

A/B testing only works when your results are backed by statistically significant data, not early trends or random spikes. If you act on insufficient or noisy data, you risk implementing changes that don’t actually improve performance.

Each A/B testing strategy must include a defined sample size, confidence level, and a clear primary metric to evaluate success. Statistical reliability ensures the winning version isn’t chosen due to short-term behavior but due to consistent performance across segments.
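One common way to check significance for a conversion-rate metric is a two-proportion z-test. The sketch below is a minimal version using only the standard library; the visitor and conversion counts are hypothetical, and your testing tool may use a different method (for example, a Bayesian or sequential approach).

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: 500 of 10,000 visitors convert on A, 580 of 10,000 on B.
z, p_value = two_proportion_z_test(500, 10_000, 580, 10_000)
alpha = 0.05  # corresponds to a 95% confidence level
print(f"z = {z:.2f}, p = {p_value:.4f}, significant: {p_value < alpha}")
```

The threshold `alpha` should be fixed before the test starts, alongside the primary metric, so the decision rule is not adjusted after seeing the data.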

With a solid understanding of statistics, let's walk through how you can actually run a successful A/B test from start to finish, making sure every step is covered.


How to Perform an A/B Test?

To run a successful A/B test, you need a clear strategy that ensures accurate insights and measurable improvements. Here’s a step-by-step guide designed to help you design, launch, and evaluate high-impact A/B testing strategies.

1. Identify Opportunities and Define Goals

Before launching any test, you need to understand where optimization can drive the highest business value.

- Analyze website performance using tools like GA4 or heatmaps to locate friction points and high-exit pages.

- Prioritize areas with high traffic but low conversions; these are ideal places to apply targeted A/B tests.

- Define SMART goals, such as increasing form submissions, lowering bounce rates, or improving product page engagement.

- Establish a clear performance baseline so you can accurately measure test impact against existing metrics.

2. Formulate a Hypothesis

Every test should begin with a data-informed prediction about how a specific change will influence user behavior.

- Create a hypothesis tied directly to your goal and the element being tested.

- Example: "Changing the CTA to red will increase clicks because the color attracts more visual attention."

- Ensure your hypothesis includes a reason, expected outcome, and measurable success criteria.

3. Design the Experiment (Create Variations)

Once your hypothesis is ready, design the actual test versions, one control and one variant.

- Create Version A (control) and Version B (variant), changing only one element at a time.

- If testing multiple elements together, consider multivariate testing, but only if the traffic volume is high enough.

- Ensure changes are noticeable, measurable, and aligned with your defined goal.

- Keep the rest of the experience identical to isolate the impact of the tested variable.

4. Choose Your A/B Testing Tool

The right platform simplifies setup, traffic splitting, and performance tracking.

- Use tools like Optimizely or AB Tasty to configure and run your test. Google Optimize, once a common choice, was discontinued in September 2023.

- Ensure the tool supports clean segmentation and integrates with your analytics stack (like GA4 or Looker Studio).

- Prioritize platforms that support server-side testing if you're working with privacy-first or cookieless environments.

5. Determine Sample Size and Test Duration

Statistical significance requires enough users and sufficient time; don’t rush the process.

- Use A/B test calculators to estimate the required sample size based on conversion rate and confidence level.

- Run your test for at least 1–2 weeks or a full user cycle to stabilize results.

- Avoid stopping early, even if trends look promising; the data needs time to settle and normalize.
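The calculation behind a typical A/B test calculator can be sketched as follows. This uses the standard normal-approximation formula for comparing two proportions; the baseline rate, minimum detectable lift, power, and daily traffic are all assumed inputs you would replace with your own.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, min_relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

def estimated_duration_days(n_per_variant, daily_visitors, variants=2):
    """Days needed if daily traffic is split evenly across all variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)

# Assumed inputs: 5% baseline conversion, 10% relative lift, 4,000 visitors/day.
n = sample_size_per_variant(baseline_rate=0.05, min_relative_lift=0.10)
days = estimated_duration_days(n, daily_visitors=4_000)
print(f"{n:,} visitors per variant, roughly {days} days")
```

Smaller expected lifts drive the required sample up sharply, so it is worth checking this number before committing to a test on a low-traffic page.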

6. Launch the Test

Once setup is complete, deploy the test carefully and monitor early activity for errors or tracking issues.

- Randomly split users into groups so each version is shown to an equal and unbiased audience.

- Launch the experiment in your tool and verify that both versions load consistently across devices.

- Monitor for issues in tag firing, pixel collection, or mobile experience consistency.
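If you ever need to split traffic yourself rather than rely on a tool, one common approach is deterministic hashing, so a returning user always sees the same version. The sketch below is a minimal illustration; the function name, experiment label, and user ID format are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant with a roughly even split."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Check that the split is close to even over many hypothetical users.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-cta")] += 1
print(counts)
```

Because the assignment depends only on the user ID and experiment name, it needs no stored state and behaves the same on every server.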

7. Analyze the Results

After reaching your required sample size, begin evaluating performance based on your test goals and KPIs.

- Confirm statistical significance using your tool’s built-in calculator or a third-party validation tool.

- Compare both versions across primary and secondary metrics to get a full picture of performance.

- Segment results by audience types, device, region, and traffic source to uncover nuanced behavioral differences.

- Review session recordings or heatmaps to understand how users interacted with each variation.

8. Act on the Findings

Your next step depends on what the data tells you, whether there’s a winner or not.

- If one variant performs significantly better, implement it permanently across your live environment.

- If results are inconclusive, document learnings and consider new hypotheses based on user interaction data.

- Keep detailed records of test setups, outcomes, and insights to inform future iterations and decision-making.

9. Iterate and Optimize

A/B testing isn’t a one-time tactic; it’s a repeatable process that should become part of your marketing culture.

- Continue running tests across different funnels, platforms, and audience segments to build cumulative performance gains.

- Don’t assume a winning variant will work forever; test it again under new conditions or with different users.

- Promote a culture of experimentation where performance data, not assumptions, backs decisions.

Ingest Labs streamlines A/B testing workflows by centralizing tag configuration, real-time event streaming, and privacy-compliant audience segmentation, enabling faster launches, cleaner tracking, and deeper test insights.

After the test is complete, it's time to assess the results. We’ll cover how to analyze your findings to make the right decisions and move forward with confidence.


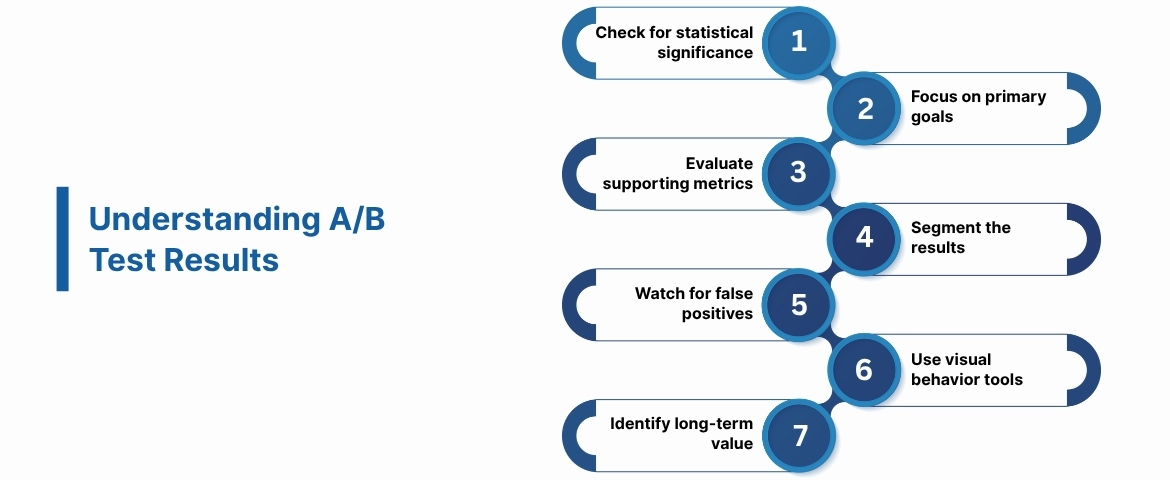

Understanding A/B Test Results

After your test concludes, analyzing the outcome correctly is essential to making smart, data-backed marketing decisions. Avoid reacting to surface-level changes, and look deeper into metrics, patterns, and user behavior to confirm meaningful impact, such as:

- Check for statistical significance: Ensure the difference in results isn’t due to chance; only act if confidence levels meet your set threshold.

- Focus on primary goals: Measure success based only on your original objective, not secondary metrics that weren’t defined before the test.

- Evaluate supporting metrics: Review bounce rate, time on site, or scroll depth to understand how changes influence overall user behavior.

- Segment the results: Break results down by traffic source, device, location, or audience type to uncover insights that global data may hide.

- Watch for false positives: Don’t trust early trends or short-lived spikes. Always validate with a full dataset and test duration.

- Use visual behavior tools: Heatmaps, click tracking, and session replays can explain why a variation worked better or failed.

- Identify long-term value: Don’t just track short-term gains. Check whether improved metrics sustain over weeks or across customer journeys.
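The false-positive warning above can be illustrated with a small simulation. In this sketch both versions share the same true conversion rate, so every "significant" result is a false positive; it compares stopping at the first significant interim check against evaluating once at the end. All parameters (run count, traffic, rate, number of checks) are assumptions chosen to keep the example fast.

```python
import math
import random

def p_value(conv_a, conv_b, n):
    """Two-sided p-value for equal-sized arms (pooled two-proportion z-test)."""
    p_pool = (conv_a + conv_b) / (2 * n)
    if p_pool == 0 or p_pool == 1:
        return 1.0  # no variation yet, so nothing to compare
    se = math.sqrt(p_pool * (1 - p_pool) * 2 / n)
    z = (conv_b - conv_a) / n / se
    return math.erfc(abs(z) / math.sqrt(2))

def simulate(runs=300, users_per_arm=2_000, rate=0.10, looks=10, alpha=0.05, seed=7):
    """Return (false-positive rate with peeking, rate with one final check)."""
    rng = random.Random(seed)
    step = users_per_arm // looks
    peeking_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        ever_significant = False
        significant = False
        for n in range(1, users_per_arm + 1):
            conv_a += rng.random() < rate  # both arms use the same true rate
            conv_b += rng.random() < rate
            if n % step == 0 or n == users_per_arm:
                significant = p_value(conv_a, conv_b, n) < alpha
                ever_significant = ever_significant or significant
        peeking_hits += ever_significant  # would have stopped at some check
        final_hits += significant         # only the last check counts
    return peeking_hits / runs, final_hits / runs

peek_rate, final_rate = simulate()
print(f"false positives with peeking: {peek_rate:.1%}, final check only: {final_rate:.1%}")
```

The peeking rate can never be lower than the final-check rate, and in runs like this it typically lands well above the nominal 5%, though the exact figures depend on the seed and settings.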

Next, let’s take a look at segmenting your results, as this can uncover deeper insights and help fine-tune your strategy.

Segmenting A/B Tests

Running A/B tests without segmentation can blur insights; what works overall might fail for key audience groups. Segmenting helps you understand who benefits most from a variation so you can refine personalization and targeting strategies.

1. Segment by Device Type

Users behave differently on mobile versus desktop, and the same layout may not perform equally across both platforms.

- Mobile users may prefer shorter content and larger buttons

- Desktop users often engage more with complex layouts or full-page messaging

2. Segment by Traffic Source

Visitors coming from ads might behave differently than those from organic search or email campaigns.

- Paid traffic may respond better to urgency or discounts

- Organic visitors often prefer clarity and trust-building elements

3. Segment by User Type

New and returning users have different needs—what works for one may underperform for the other.

- First-time visitors need trust signals, fast context, and simpler CTAs

- Returning users may convert better with personalized messaging or loyalty rewards

4. Segment by Location or Region

Geographic and cultural differences can significantly affect how users interpret visual cues, offers, and tone.

- Test pricing displays by country (USD vs EUR vs INR)

- Adjust imagery or copy to reflect local preferences and expectations

5. Segment by Behavioral Traits

Use previous behavior (pages visited, time spent, actions taken) to refine your test audiences even further.

- Segment based on funnel stage: product viewers vs cart abandoners

- Target based on past purchases or engagement history
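A short sketch shows how an overall winner can hide a losing segment. The numbers below are invented for illustration: Version B wins overall but underperforms on mobile.

```python
from collections import defaultdict

# Hypothetical aggregated results: (segment, variant, visitors, conversions).
rows = [
    ("desktop", "A", 1000, 50),
    ("desktop", "B", 1000, 80),
    ("mobile", "A", 1000, 40),
    ("mobile", "B", 1000, 30),
]

def conversion_rates(rows, by_segment=True):
    """Conversion rate per (segment, variant), or per variant overall."""
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for segment, variant, visitors, conversions in rows:
        key = (segment, variant) if by_segment else ("all", variant)
        totals[key][0] += visitors
        totals[key][1] += conversions
    return {key: conv / vis for key, (vis, conv) in totals.items()}

overall = conversion_rates(rows, by_segment=False)
per_segment = conversion_rates(rows)

for (segment, variant), rate in sorted({**overall, **per_segment}.items()):
    print(f"{segment:8s} {variant}: {rate:.1%}")
```

Each segment has fewer users than the full test, so a segment-level difference needs its own significance check before you act on it.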

To tie everything together, we'll go over a few practical A/B testing examples that demonstrate how the theory works in real-world scenarios.

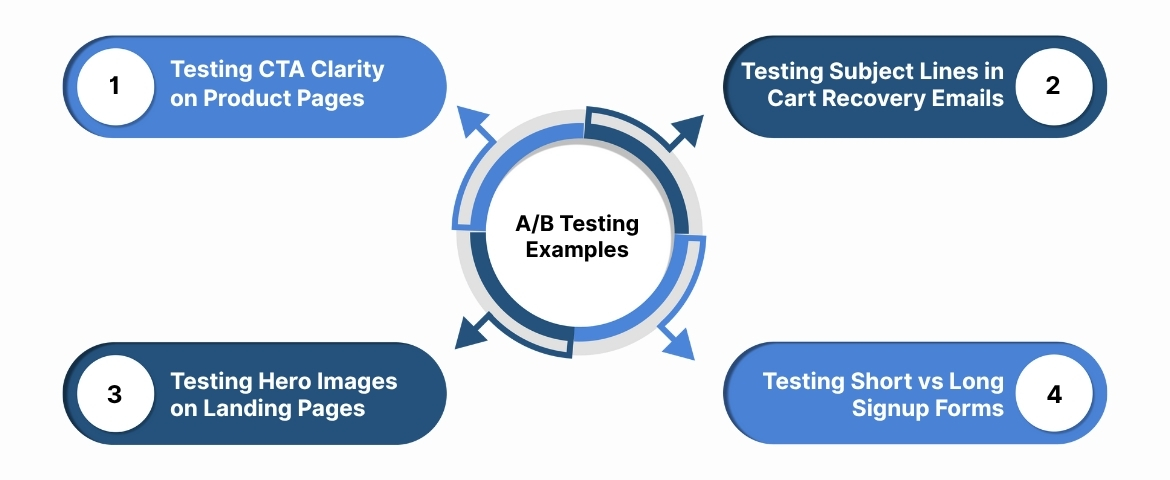

A/B Testing Examples

Here are four practical examples that show how thoughtful A/B testing strategies can lead to measurable improvements across key funnels.

Example 1: Testing CTA Clarity on Product Pages

Try changing a vague CTA like 'Learn More' to a specific one like 'Add to Cart Now.' Clear, action-driven language often improves engagement by making the next step explicit for visitors.

Example 2: Testing Subject Lines in Cart Recovery Emails

Try testing “Complete Your Purchase” against “Don’t Miss Out—Your Items Are Still Here” in abandoned cart emails. The urgency-based version could lead to higher open rates, especially if your audience is price- or time-sensitive.

Example 3: Testing Hero Images on Landing Pages

Swap a product-only image with a lifestyle visual showing people using your product in a real-world scenario. This change can increase emotional connection and trust, especially if your users buy based on visual storytelling.

Example 4: Testing Short vs Long Signup Forms

If you reduce your form from six fields to just email and password, users may complete signups more quickly. Shorter forms lower friction, particularly on mobile, helping to boost lead capture without hurting qualification quality.

As we wrap up, let's discuss common mistakes to avoid when running A/B tests and how to ensure your efforts don’t fall short.

A/B Testing Mistakes to Avoid

Even well-planned tests can fail when certain fundamentals are overlooked. The table below highlights common mistakes and what to do instead:

| Mistake | Why It Fails | What To Do Instead |
|---|---|---|
| Ending tests too early | Early trends may reverse; results aren’t yet statistically valid | Wait for full traffic and time coverage before making decisions |
| Testing too many variables | You won’t know what caused the result | Focus on one change unless running a multivariate test |
| Not segmenting results | You miss valuable patterns in different user groups | Always review by device, traffic source, or behavior |
| Ignoring secondary metrics | A primary KPI might improve while hurting others | Analyze bounce rate, engagement, or time on site, too |
| Skipping technical QA | Bugs or tracking issues can skew data | QA on real devices and environments before going live |

With the right approach, you can consistently achieve better results. Let’s discuss how A/B testing can work alongside your SEO strategy to maintain both performance and rankings.

A/B Testing and SEO

Your SEO and A/B testing strategies can coexist, provided tests are executed carefully and best practices are in place. Here’s a checklist of dos and don'ts that protect rankings while testing at scale:

- Use rel="canonical" tags on variant URLs if testing with multiple versions of the same page

- Keep content differences minimal when testing SEO-relevant elements like headlines, metadata, or structure

- Use server-side testing or dynamic rendering to avoid indexation of both variants

- Don’t block test pages with robots.txt. It can hurt crawl flow and discovery

- Always notify your SEO team before running structural or layout tests that could affect internal links or schema

- Never let low-performing variants stay live after the test; Google may start indexing the wrong version

Ingest Labs’ platform provides robust, privacy-first data collection and A/B testing capabilities for both web and mobile. Features like server-side web tagging, real-time tag monitoring, and advanced triggers in the Tag Manager help you run controlled experiments at scale while maintaining SEO integrity.

Final Thoughts

Running successful A/B tests demands more than just changing elements; your strategy, data accuracy, and test discipline all matter. When you define goals clearly, monitor the right metrics, and iterate based on evidence, your optimization efforts become a predictable, scalable growth engine.

Ingest Labs simplifies this process by centralizing your data collection, QA, and analytics workflows, all without third-party cookies. From server-side tagging and privacy-first tracking to real-time alerts, identity resolution, and advanced segmentation, every feature is built for teams running high-impact experiments. Ready to bring precision to your testing strategies?

Request a demo from Ingest Labs today and start running tests that deliver results you can trust.

FAQ

1. What is the main purpose of A/B testing?

A/B testing helps you compare two versions of a webpage or element to find which one performs better with users.

2. How long should an A/B test run?

Run your test for at least one to two full business cycles or until statistical significance is reached—whichever is longer.

3. What elements can I A/B test on a website?

You can test headlines, CTAs, images, page layouts, product descriptions, pricing formats, and checkout flows.

4. Is A/B testing bad for SEO?

No—if you use best practices like canonical tags, avoid cloaking, and keep tests temporary, it won't harm SEO.

5. Can I run A/B tests without third-party cookies?

Yes—privacy-first tools like Ingest Labs allow server-side testing and cookieless tracking while staying compliant with data laws.