Webhooks play a central role in how modern data and marketing systems communicate, transferring events between platforms in real time. When they fail or go unmonitored, those missed events can lead to data gaps, broken attribution, and unreliable reporting.

According to Svix’s State of Webhooks report, 83% of API providers now offer webhook functionality, yet adoption of standardized reliability and observability practices varies significantly across platforms. That gap creates risk for organizations that depend on accurate event delivery to power analytics, personalization, and automation.

Monitoring webhook performance helps detect delivery issues early and maintain a validated and trustworthy data flow across all systems. It gives teams the visibility needed to trust their event pipelines.

Key Takeaways:

- Webhooks monitoring helps maintain the accuracy and reliability of event data across connected systems.

- Proactive monitoring detects delivery issues early and prevents data loss, duplication, and reporting discrepancies.

- Tracking metrics like success rate, latency, and retries gives teams visibility into performance and stability.

- Integrating webhook monitoring within server-side tracking and data pipelines ensures consistent, end-to-end event integrity, including validation, deduplication, and attribution consistency.

What is a Webhook?

A webhook is a real-time event notification system that lets one platform automatically send data to another whenever a specific action occurs. Instead of polling or manually requesting updates, webhooks push information the moment an event happens, such as a purchase, form submission, subscription update, or CRM change.

A webhook typically contains:

- Trigger event: The action that initiates the request

- Payload: The data describing what happened

- Endpoint URL: The receiving system’s address

- Metadata: Headers, timestamps, or signatures used for validation

Because webhooks deliver data instantly, they are foundational to modern analytics, marketing automation, and server-side tracking. When they fail, the receiving system never learns about key events, leading to missing transactions, broken attribution, and inconsistencies across your reporting layers. That’s why monitoring webhooks is essential for any team that relies on accurate event delivery.

What is Webhooks Monitoring?

Webhooks monitoring involves observing the behavior of webhook deliveries to understand if requests are reaching their destinations successfully and how quickly they are being processed. It tracks the end-to-end journey of each event: when it was sent, how long it took to get a response, and whether the receiving endpoint acknowledged it correctly.

A monitoring system captures data points such as delivery timestamps, HTTP response codes, retries, and payload integrity checks. These metrics show whether the webhook infrastructure is stable and help teams pinpoint where errors or latency originate.
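
As a rough illustration of the data points listed above, a single delivery attempt could be recorded like this. The class and field names (`DeliveryRecord`, `event_id`, `attempt`, and so on) are hypothetical and not taken from any particular provider; this is a minimal sketch, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class DeliveryRecord:
    """One webhook delivery attempt (hypothetical field names)."""
    event_id: str
    endpoint: str
    sent_at: datetime
    responded_at: Optional[datetime]  # None if no response arrived
    status_code: Optional[int]        # None if the connection failed
    attempt: int = 1

    @property
    def latency_ms(self) -> Optional[float]:
        # Time between sending the request and receiving the response.
        if self.responded_at is None:
            return None
        return (self.responded_at - self.sent_at).total_seconds() * 1000

    @property
    def acknowledged(self) -> bool:
        # Assumption: any 2xx response counts as an acknowledgment.
        return self.status_code is not None and 200 <= self.status_code < 300


sent = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
record = DeliveryRecord("evt_1", "https://example.com/hooks", sent,
                        sent + timedelta(milliseconds=250), 200)
print(record.latency_ms, record.acknowledged)
```

Keeping one record per attempt, rather than per event, makes retries and slow acknowledgments visible later on.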

In large systems that exchange thousands of events per minute, silent failures are common. A message can appear to have been accepted but never make it to the target service because of authentication issues, timeout thresholds, or payload mismatches. Monitoring makes these failures visible so they can be addressed before they distort downstream analytics or automation.

Types of Webhook Monitoring

Webhook monitoring includes several complementary approaches that give you visibility into delivery health, latency, failures, and downstream processing. Together, they help teams detect data loss early and maintain a reliable event pipeline.

Below are the primary categories of webhook monitoring, adapted for modern data infrastructures and server-side tracking setups:

1. Delivery Monitoring

This evaluates whether each webhook request was successfully delivered and acknowledged by the receiving endpoint.

What it tracks:

- HTTP response codes

- Success vs. failure rate

- Network or DNS errors

- Closed connections or unreachable endpoints

Delivery monitoring helps teams detect broken integrations, misconfigured endpoints, expired certificates, or sudden infrastructure outages.
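
A minimal sketch of how delivery outcomes might be summarized, assuming each attempt is reduced to its HTTP status code and that `None` stands for a network-level failure with no response. The function names and category labels are illustrative only.

```python
from collections import Counter


def classify(status_code):
    """Map a status code (or None for no response) to a coarse outcome."""
    if status_code is None:
        return "network_error"
    if 200 <= status_code < 300:
        return "success"
    if 400 <= status_code < 500:
        return "client_error"
    if status_code >= 500:
        return "server_error"
    return "other"


def delivery_summary(status_codes):
    """Return the success rate and a count of each outcome category."""
    counts = Counter(classify(code) for code in status_codes)
    total = sum(counts.values())
    success_rate = counts["success"] / total if total else 0.0
    return {"success_rate": success_rate, "counts": dict(counts)}


summary = delivery_summary([200, 204, 401, 503, None])
print(summary)
```

Separating client errors from server and network errors helps narrow a failure down to configuration, the receiving service, or connectivity.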

2. Latency Monitoring

Latency monitoring measures how long it takes for a webhook event to be sent, received, and processed. High latency often signals bottlenecks inside either the provider or the receiving service.

What it tracks:

- Response time per endpoint

- Spikes during high traffic windows

- Delays caused by retry queues or rate limiting

For data teams, latency issues directly affect the freshness and reliability of downstream analytics and attribution.
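
Averages tend to hide latency spikes, so percentiles are a common way to summarize response times. The sketch below uses the nearest-rank method on a list of latencies in milliseconds; the sample values are made up for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical response times for one endpoint, in milliseconds.
latencies_ms = [120, 95, 110, 3000, 105, 130, 100, 115, 125, 90]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(p50, p95)
```

Here a single slow response barely moves the median but dominates the 95th percentile, which is why both are worth tracking per endpoint.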

3. Retry & Queue Monitoring

Providers often retry failed deliveries, but repeated retries signal deeper reliability issues.

What it tracks:

- Retry frequency per endpoint

- Queue size and backlog behavior

- Events stuck in pending or delayed states

Queue visibility ensures that sudden traffic surges don’t silently create delivery gaps.
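
One way to quantify retry frequency per endpoint is to count how many events needed more than one delivery attempt. This sketch assumes an attempt log of `(endpoint, event_id)` pairs, one entry per attempt; that log shape is an assumption for illustration.

```python
from collections import defaultdict


def retry_rate_by_endpoint(attempts):
    """Fraction of events per endpoint that needed more than one attempt."""
    attempts_per_event = defaultdict(int)
    for endpoint, event_id in attempts:
        attempts_per_event[(endpoint, event_id)] += 1

    events = defaultdict(int)
    retried = defaultdict(int)
    for (endpoint, _event_id), count in attempts_per_event.items():
        events[endpoint] += 1
        if count > 1:
            retried[endpoint] += 1
    return {endpoint: retried[endpoint] / events[endpoint] for endpoint in events}


attempt_log = [("crm", "e1"), ("crm", "e1"), ("crm", "e2"), ("ads", "e3")]
rates = retry_rate_by_endpoint(attempt_log)
print(rates)
```

A retry rate that climbs for one endpoint while others stay flat usually points at that endpoint rather than at the provider.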

4. Payload Integrity Monitoring

Payload monitoring verifies that the data structure and fields of each webhook match what your systems expect.

What it checks:

- Schema validation

- Missing fields or mismatched types

- Unexpected changes in payload format

- Truncated or corrupted data

This protects downstream systems, particularly analytics and attribution pipelines, from ingesting malformed events.
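
A minimal sketch of the checks above using only the standard library. The expected fields (`event_id`, `event_type`, `amount`, `timestamp`) are hypothetical; a real integration would take its schema from the provider's documentation or use a dedicated schema validator.

```python
# Hypothetical expected schema: field name -> accepted Python type(s).
EXPECTED_FIELDS = {
    "event_id": str,
    "event_type": str,
    "amount": (int, float),
    "timestamp": str,
}


def validate_payload(payload):
    """Return a list of problems; an empty list means the payload matches."""
    problems = []
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"wrong type for {field}: {actual}")
    # Unknown fields often signal an unannounced format change.
    for field in sorted(set(payload) - set(EXPECTED_FIELDS)):
        problems.append(f"unexpected field: {field}")
    return problems


good = {"event_id": "e1", "event_type": "purchase", "amount": 19.99,
        "timestamp": "2024-01-01T12:00:00Z"}
bad = {"event_id": "e1", "event_type": "purchase", "amount": "19.99"}
print(validate_payload(good))
print(validate_payload(bad))
```

Returning the list of problems, instead of a plain pass or fail, gives the monitoring layer something specific to log and alert on.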

5. Authentication & Security Monitoring

Most webhook systems require signature validation or token-based authentication. Missing or invalid credentials cause legitimate events to be rejected.

What it tracks:

- Signature verification failures

- Expired or invalid tokens

- Timestamp or hashing mismatches

- Unauthorized endpoint requests

This ensures that events remain secure without causing unnecessary delivery failures.
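
Signature schemes differ between providers, so the following is only a sketch under stated assumptions: the signature is an HMAC-SHA256 hex digest over `"<timestamp>.<body>"`, and requests older than five minutes are rejected. The function names and return labels are illustrative; the point is that each rejection reason is reported separately so it can be counted.

```python
import hashlib
import hmac
import time


def sign(secret: bytes, timestamp: int, body: bytes) -> str:
    """Assumed scheme: HMAC-SHA256 over '<timestamp>.<body>', hex-encoded."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret, timestamp, body, signature, now=None, tolerance_s=300):
    """Return 'ok' or the reason for rejection, for use as a monitoring label."""
    now = time.time() if now is None else now
    if abs(now - timestamp) > tolerance_s:
        return "stale_timestamp"
    expected = sign(secret, timestamp, body)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, signature):
        return "signature_mismatch"
    return "ok"


secret = b"example-secret"
signature = sign(secret, 1000, b'{"id": "evt_1"}')
print(verify(secret, 1000, b'{"id": "evt_1"}', signature, now=1010))
```

A sudden rise in `signature_mismatch` results often follows a secret rotation, while `stale_timestamp` results tend to indicate clock drift or long retry delays.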

6. End-to-End Monitoring

End-to-end monitoring follows the full lifecycle of each event—from provider to ingestion layer to final data destination.

What it verifies:

- Event was delivered

- Event was processed and stored

- Event appears in analytics or server-side tracking output

- No duplication or dropped events

This type of monitoring is especially important for ensuring attribution accuracy and validating server-side event pipelines.
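
The verification steps above can be approximated with a simple reconciliation between the event IDs a provider reports as sent and the IDs found in the final destination. How those two lists are obtained is left open here; the function name and result keys are assumptions for illustration.

```python
from collections import Counter


def reconcile(sent_ids, stored_ids):
    """Compare sent event IDs against stored ones and report discrepancies."""
    stored_counts = Counter(stored_ids)
    sent = set(sent_ids)
    stored = set(stored_counts)
    return {
        "dropped": sorted(sent - stored),        # sent but never stored
        "duplicated": sorted(i for i, n in stored_counts.items() if n > 1),
        "unexpected": sorted(stored - sent),     # stored but not in the sent list
    }


report = reconcile(["a", "b", "c"], ["a", "a", "c", "z"])
print(report)
```

Running a check like this on a schedule turns silent drops and duplicates into explicit lists that can be investigated or replayed.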

7. Performance & Throughput Monitoring

This evaluates how your webhook infrastructure behaves at scale.

What it tracks:

- Events per second (EPS)

- Throughput under heavy load

- Provider rate limiting thresholds

- Bottlenecks in processing or queuing

Performance trends help teams anticipate failure points before they cause data gaps.
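
As a small sketch of the events-per-second metric, arrival timestamps can be bucketed into one-second windows to find the peak and mean rate. The input is assumed to be a list of arrival times in seconds, such as Unix epoch floats.

```python
import math
from collections import Counter


def throughput_summary(timestamps):
    """Peak and mean events per second over the observed time span."""
    if not timestamps:
        return {"peak_eps": 0, "mean_eps": 0.0}
    per_second = Counter(math.floor(ts) for ts in timestamps)
    span = max(per_second) - min(per_second) + 1  # seconds covered, inclusive
    return {
        "peak_eps": max(per_second.values()),
        "mean_eps": len(timestamps) / span,
    }


stats = throughput_summary([0.1, 0.5, 0.9, 1.2, 3.7])
print(stats)
```

Comparing the peak against a provider's documented rate limit, where one is published, gives an early indication of how much headroom remains.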

8. Provider Reliability Monitoring

Different webhook providers vary widely in reliability. Monitoring the provider itself helps you identify infrastructure-level issues outside your control.

What it tracks:

- Provider outages or degraded delivery

- Sudden increase in 5xx responses

- Slower delivery during peak usage

- Regional reliability variations

This visibility helps teams distinguish internal issues from external disruptions.

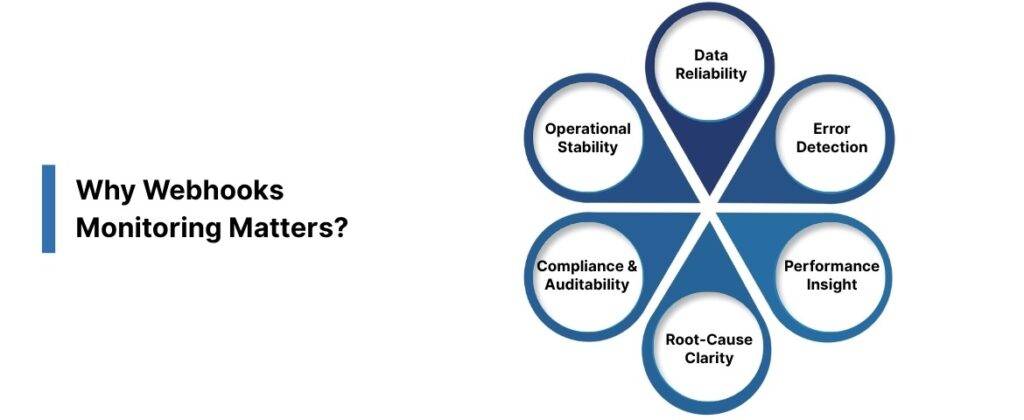

Why Webhooks Monitoring Matters

Webhook reliability directly affects how accurately systems exchange and record data. When deliveries fail, the downstream impact can include missing transactions, broken attribution, and misleading reports.

- Data Reliability: Continuous monitoring ensures every event reaches its destination and is acknowledged, reducing undetected data loss.

- Error Detection: Identifies timeouts, retries, and bad responses early, before they distort analytics or reporting accuracy.

- Performance Insight: Tracks latency, response codes, and throughput to maintain consistent delivery during traffic spikes.

- Root-Cause Clarity: Provides the evidence needed to determine whether failures stem from authentication, payload, or infrastructure issues.

- Compliance and Auditability: Maintains detailed delivery logs that support traceability and meet privacy or governance requirements.

- Operational Stability: Strengthens confidence in automation, marketing triggers, and attribution systems that depend on event integrity.


Common Webhook Failures to Watch For

Even with well-configured integrations, webhook failures occur frequently and often go unnoticed without proper monitoring. Understanding the main failure types helps teams identify where to focus detection and prevention efforts.

Key failure categories:

- Delivery Errors: Occur when the receiving endpoint returns 4xx or 5xx status codes, commonly caused by authentication failures, signature mismatches, missing resources, or temporary server issues.

- Timeouts: Happen when the target system takes too long to respond. Providers typically close the connection after a fixed timeout, often between a few seconds and 30 seconds depending on the provider, causing the event to fail or be retried.

- Duplicate Events: Arise when retries are triggered after a delayed acknowledgment. Without idempotency handling, these duplicates can distort analytics and downstream metrics.

- Payload Validation Issues: Result from schema mismatches or missing fields, leading to rejected requests or incomplete data ingestion.

- Network or DNS Failures: Caused by transient network outages, DNS resolution issues, TLS/certificate mismatches, or misconfigured load balancers.

- Security Rejections: Invalid signatures or expired tokens can cause legitimate requests to be rejected during verification.

Monitoring these patterns helps isolate whether issues stem from provider reliability, internal configuration, or downstream application behavior.
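
For the duplicate-event case in particular, idempotency handling can be sketched as a receiver that remembers which event IDs it has already processed. This example keeps the IDs in an in-memory set, which is an assumption made for brevity; a production receiver would more likely use a persistent store with an expiry.

```python
class DedupingReceiver:
    """Processes each event ID once and counts repeated deliveries."""

    def __init__(self):
        self.seen_ids = set()
        self.duplicates = 0
        self.processed = []

    def receive(self, event_id, payload):
        """Return True if the event was processed, False if it was a duplicate."""
        if event_id in self.seen_ids:
            self.duplicates += 1  # expose this counter as a monitoring metric
            return False
        self.seen_ids.add(event_id)
        self.processed.append(payload)
        return True


receiver = DedupingReceiver()
for event_id in ["evt_1", "evt_2", "evt_1"]:
    receiver.receive(event_id, {"id": event_id})
print(receiver.duplicates, len(receiver.processed))
```

Counting duplicates instead of silently discarding them shows how often retries arrive after a delayed acknowledgment.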

How to Monitor Webhooks Effectively

Monitoring webhooks requires more than checking if requests succeed. It involves collecting the right metrics, setting thresholds for alerts, and ensuring that delivery data is complete and traceable. The following practices form the foundation of an effective monitoring approach.

- Track Delivery Metrics: Record success rates, response codes, latency, and retry counts for every endpoint. Consistent logging helps reveal trends and detect early signs of instability in your server-side event pipeline.

- Implement Alerting Rules: Define thresholds for failure rates, latency spikes, or authentication errors. Automated real-time monitoring enables faster response and minimizes data loss.

- Validate Payload Integrity: Compare payload structures against expected schemas to prevent rejected or malformed data from entering downstream event tracking and attribution systems.

- Log End-to-End Events: Capture request, response, signature verification, and latency metadata for each delivery. This creates a traceable record within your data streaming infrastructure.

- Monitor Retries and Queues: Observe how often retries are triggered and whether queue backlogs develop under load. Reliable queueing ensures continuous delivery across your server-side tracking setup.

- Test Regularly: Use sandbox environments to simulate webhook failures and verify that your monitoring and alerting system behaves as expected.

A structured monitoring process turns webhook delivery from a blind spot into an observable, measurable part of the data pipeline.
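
The alerting practice above can be sketched as a comparison of current metrics against fixed thresholds. The metric names and limits below are placeholders chosen for illustration, not recommended values; suitable thresholds depend on each integration's normal behavior.

```python
# Hypothetical thresholds; tune these to each endpoint's baseline.
THRESHOLDS = {
    "failure_rate": 0.05,      # fraction of failed deliveries
    "p95_latency_ms": 2000,    # 95th percentile response time
    "auth_failures": 10,       # signature or token rejections per window
}


def evaluate_alerts(metrics, thresholds=THRESHOLDS):
    """Return a message for every metric that exceeds its threshold."""
    return [
        f"{name} = {metrics[name]} exceeds {limit}"
        for name, limit in thresholds.items()
        if metrics.get(name, 0) > limit
    ]


alerts = evaluate_alerts(
    {"failure_rate": 0.08, "p95_latency_ms": 900, "auth_failures": 3}
)
print(alerts)
```

In practice the returned messages would be forwarded to whatever paging or chat tool the team already uses.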


Integrating Webhook Monitoring with Your Data Pipeline

Webhook monitoring works best when it’s embedded directly into the data infrastructure rather than treated as a standalone tool. Integrating monitoring with event ingestion, storage, and analytics ensures that issues are detected at the source and logged consistently across systems.

A robust setup includes:

- Centralized logging for every webhook event, including payload, response, and latency metrics.

- Automated forwarding of webhook logs to observability platforms or data warehouses for analysis.

- Alignment between webhook monitoring and server-side tracking (via Ingest IQ) to maintain a unified, schema-governed, signature-verified data flow.

- Real-time monitoring and alerting (such as Tag Monitoring & Alerts) tied into your event pipeline to catch anomalies before they affect analytics.

This approach allows teams to trace an event’s full lifecycle, from trigger to database entry, ensuring that all downstream insights are built on verified, complete data.
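
Centralized logging is easier to forward and query when every delivery is written as one structured line. The sketch below emits a JSON line per delivery; the field names are hypothetical and would need to match whatever observability platform or warehouse schema is in use.

```python
import json
from datetime import datetime, timezone


def delivery_log_line(event_id, endpoint, status_code, latency_ms,
                      signature_valid, attempt=1, logged_at=None):
    """Serialize one delivery attempt as a single JSON log line."""
    logged_at = logged_at or datetime.now(timezone.utc)
    return json.dumps({
        "logged_at": logged_at.isoformat(),
        "event_id": event_id,
        "endpoint": endpoint,
        "status_code": status_code,
        "latency_ms": latency_ms,
        "signature_valid": signature_valid,
        "attempt": attempt,
    }, sort_keys=True)


line = delivery_log_line("evt_1", "https://example.com/hooks", 200, 182.5, True)
print(line)
```

Because each line carries the event ID, the same identifier can be used to join webhook logs with ingestion and analytics records downstream.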

Final Thoughts

Webhook delivery plays a critical role in maintaining the accuracy, reliability, and attribution integrity of your data systems. When events fail, analytics, automation, and customer reporting all begin to drift from reality. Implementing structured monitoring ensures that every webhook is delivered, validated, and recorded, protecting the integrity of your data pipeline.

With Ingest Labs, you can bring full visibility and reliability to your event delivery process. Ingest IQ strengthens webhook performance through server-side tracking and real-time data validation, while Event IQ unifies visibility across platforms so every event is accounted for. Tag Monitoring & Alerts adds another layer of assurance by detecting delivery errors and latency issues before they affect reporting or automation.

These capabilities work together to create a dependable event infrastructure that supports accurate analytics, stable automation, and better business decisions.

Ready to improve webhook reliability and gain confidence in your data?

Contact Ingest Labs today to enhance your monitoring strategy and ensure every event reaches its destination.

Frequently Asked Questions (FAQs)

1. What is the purpose of webhook monitoring?

Webhook monitoring helps track the delivery and performance of webhook events between systems. It ensures that requests are successfully sent, received, and acknowledged, reducing the risk of data loss or incomplete analytics.

2. How often should teams review their webhook performance data?

Performance metrics should be reviewed continuously, with automated alerts set for anomalies. However, a full audit of webhook health, response times, and retry behavior is recommended at least once a quarter to catch long-term reliability trends.

3. What metrics are most important in webhook monitoring?

Key metrics include delivery success rate, average response time, error distribution (4xx and 5xx codes), retry frequency, and queue backlog size. Tracking these indicators provides insight into both the stability and scalability of webhook infrastructure.

4. Can webhook monitoring prevent duplicate or missed events?

Yes. By observing retries, acknowledgment times, and idempotency responses, monitoring tools can detect when events are duplicated or dropped, allowing teams to adjust retry logic or endpoint configurations before errors propagate downstream.

5. How does webhook monitoring improve data quality and attribution?

Reliable webhook delivery ensures that all conversion, transaction, and engagement events reach analytics systems in real time. This completeness improves attribution accuracy, campaign performance measurement, and overall trust in the data powering business decisions.